At CES this week, NVIDIA unveiled new Physical AI tools that fundamentally change how robots are trained – shifting intelligence development from the real world into simulation. As robots begin learning in realistic virtual environments before deployment, it’s clear that they need something very familiar to humans: a kindergarten.

The Robot Kindergarten

Robert Playter, the CEO of Boston Dynamics, made a very astute observation on 60 Minutes. He noted that if people could see just how much effort goes into getting robots to perform even the simplest tasks, they would rest easy knowing that a “Terminator” scenario is not happening anytime soon.

This perfectly encapsulates the current reality of robotics. The image painted by sci-fi movies, and our expectations of advanced robots like Boston Dynamics’ Atlas, 1X’s Neo, or Tesla’s Optimus, is still far removed from how intelligent or human-like robots actually are today.

Photo credit: NVIDIA

Today's robots can largely perform only the tasks they were trained for, and they cannot deviate much from what they have learned. Often, the reality behind a dazzling marketing video is that the robot was teleoperated by a human. In effect, the robot's brain was a human brain.

But this is changing rapidly. Robot training is currently one of the hottest topics in technology. Industries are looking to improve efficiency and quality with robotics, while transport operators are looking to ease driver shortages with AI-driven vehicles, which are essentially robots.

What is a robot, really?

When we talk about robots, they come in many shapes and sizes. There are the familiar orange KUKA arms in car factories, but there are also humanoids walking on legs. If a harbor crane is automated by AI, is that not also a robot in a certain sense? Robotics is pushing into our daily lives in ways we don't even realize.

The common denominator is that all these robot "brains" need to be trained. Traditionally, this has meant programming specific movement paths and having the robot repeat them endlessly on an assembly line. In a way, these were just pre-recorded events.

The new curriculum: Physical AI

NVIDIA has pushed this field forward with what it calls Physical AI. The company has done immense work to ensure robots can be trained in fully simulated, physically realistic environments. This allows a robot to attempt a task thousands of times in simulation until it learns it.

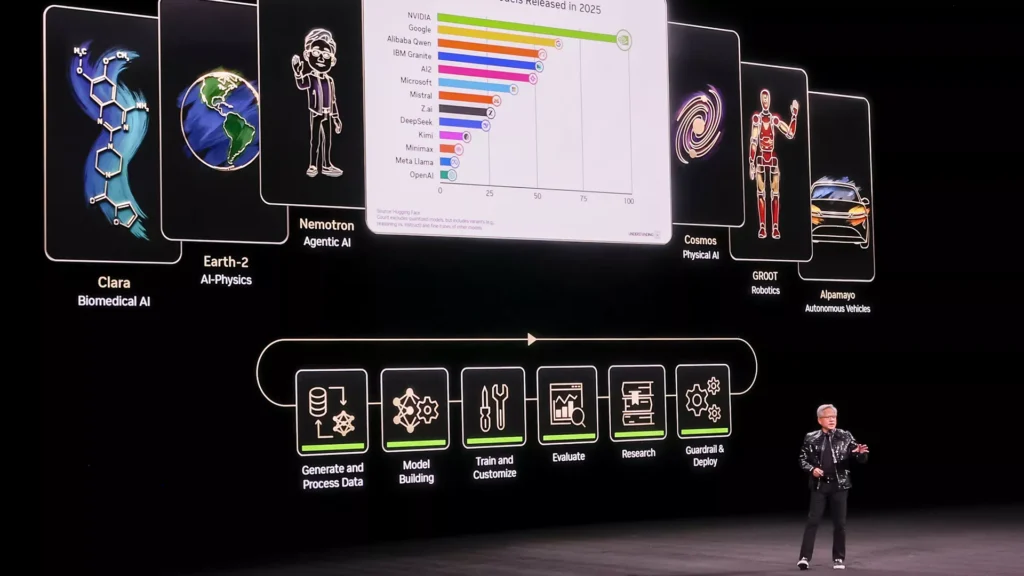

Photo credit: NVIDIA

It is faster, cheaper, and safer to train a robot in a simulation first. Once it has learned enough, or once it has graduated, it gets to try its skills in the real world.

The training methods have evolved significantly:

- Reinforcement Learning: This is the original game-changer, the "carrot and stick" approach. A neural network is given a reward function, meaning a goal such as "try to stay standing." The robot then begins to learn what kind of legs, arms, and servos it has, and how to use them to achieve that goal. This is simulated thousands of times in NVIDIA’s Isaac Lab. The result is a "skill" that allows the robot to stand.

- Mimicry and Teleoperation: For broader and more complex sequences, such as "put the apple in the basket," Reinforcement Learning isn't always enough. Here, we teach by mimicking. A human operator uses their own body to pantomime the movement (teleoperation), and the robot mimics it. Once the human has demonstrated the task enough times, it is replayed in simulation 1,000 more times to train the neural network, creating a robust skill for apple-picking.
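To make the "carrot and stick" idea concrete, here is a minimal Python sketch of a reward function for "try to stay standing." It is a toy under stated assumptions, not how Isaac Lab works: the physics is a simple inverted pendulum, the "skill" is just two controller gains, and learning is plain random search rather than the neural-network algorithms used in practice. All names (`run_episode`, `train`, `FALL_ANGLE`, and so on) are hypothetical and chosen for illustration.

```python
import math
import random

DT = 0.02          # simulation time step in seconds
MAX_STEPS = 500    # one attempt lasts at most 10 simulated seconds
FALL_ANGLE = 0.8   # radians; beyond this the toy robot counts as fallen


def run_episode(gains, start_angle=0.1):
    """Simulate one attempt and return the total reward (steps spent upright)."""
    angle, velocity = start_angle, 0.0
    total_reward = 0.0
    for _ in range(MAX_STEPS):
        # Policy: a linear controller whose two gains are the learned "skill".
        torque = -(gains[0] * angle + gains[1] * velocity)
        # Toy inverted-pendulum physics: gravity tips it over, torque corrects.
        acceleration = 9.81 * math.sin(angle) + torque
        velocity += acceleration * DT
        angle += velocity * DT
        if abs(angle) > FALL_ANGLE:
            break  # fell over: the attempt ends and no more reward is earned
        total_reward += 1.0  # reward function: +1 for every step still standing
    return total_reward


def train(trials=200, seed=0):
    """Random search: keep a candidate only if its reward is at least as good."""
    rng = random.Random(seed)
    best_gains = [0.0, 0.0]
    best_reward = run_episode(best_gains)
    for _ in range(trials):
        # Try a small random change to the current best gains.
        candidate = [g + rng.gauss(0.0, 2.0) for g in best_gains]
        reward = run_episode(candidate)
        if reward >= best_reward:
            best_gains, best_reward = candidate, reward
    return best_gains, best_reward


if __name__ == "__main__":
    untrained = run_episode([0.0, 0.0])
    gains, trained = train()
    print(f"untrained reward: {untrained}, trained reward: {trained}")
```

Each call to `run_episode` is one simulated attempt, and `train` repeats that attempt a couple of hundred times. Because only candidates that score at least as well are kept, the trained reward can never be lower than the untrained one. Real training replaces the two gains with a neural network and the toy physics with a full simulator, but the loop of try, score, and adjust is the same idea.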

New Robotics Educational Tools from NVIDIA

At CES in Las Vegas earlier this week, NVIDIA introduced a new suite of tools that act as the textbooks and senses for these robots:

- NVIDIA Cosmos™ Transfer 2.5 and NVIDIA Cosmos Predict 2.5: Open, fully customizable world models. These enable physically based synthetic data generation, allowing robots to train in simulations that closely mirror the laws of physics.

- NVIDIA Cosmos Reason 2: An open reasoning Vision Language Model (VLM). This acts as the robot's "common sense," enabling it to see, understand, and act in the physical world like a human.

- NVIDIA Isaac™ GR00T N1.6: A model purpose-built for humanoid robots. It unlocks full-body control and utilizes Cosmos Reason for better context understanding.

These tools accelerate robot training to a new level. We now have pre-trained AI models that explain the world, tasks that help robots learn new things, and generative models that simulate millions of different contexts and situations.

Younite AI - nurturing robots for the real world

At Younite AI, we are currently focusing heavily on this Robot Kindergarten. We are leveraging NVIDIA’s Physical AI capabilities to teach various robots to perform value-adding tasks for our clients.

Just like in a kindergarten, our goal is to create a platform that nurtures individuals to thrive in the real world.

About the author

Sami Heinonen

Sami is a visionary technology leader and innovator with 30+ years of experience in the internet and immersive realities. As CTO of 3D/XR at Younite AI, he specializes in NVIDIA Omniverse and XR, bridging cutting-edge technology with real-world use cases.